Applied Bayesian Statistics

A study guide with causal thinking (WIP)

Once we have a confident grasp of the fundamentals, we continue on the path of applied Bayesian statistics. It is an extremely flexible and composable approach to building explainable models of increasing complexity and realism. We will learn new tools to deal in a principled way with missing data, measurement error, sampling biases, nonlinearities, discreteness, dynamics, and heterogeneity.

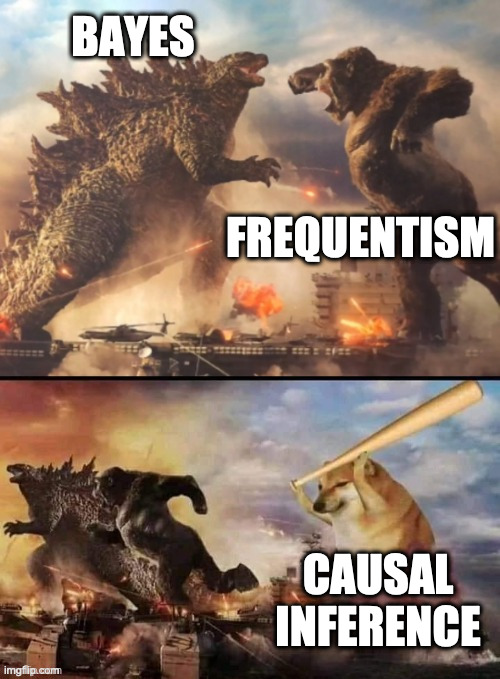

Instead of applying an out-of-the-box model, we will build custom ones for each individual application in an iterative way. Choosing appropriate probability distributions and modeling assumptions will be critical in this process, along with model critique and validation. There are many ways to teach bayesian statistics and generalized linear models, but I think R. McElreath has the best approach, by giving a lot of importance to DAGs of influence, simulation, and ideas from causal inference.

Many experts in the field argue that this should be the default way we do regression analysis and that we need a strong justification to show that a simple linear regression is the appropriate model.

This modeling approach will enable us to overcome the limitations of simpler models encountered before. Handling correlated and multilevel data is essential to make efficient inferences and draw correct conclusions.

One notable difference between the Bayesian approach and the traditional way advanced econometrics is taught, is that we will focus on computation instead of proofs and heavy-duty mathematical statistics. In a sense, it means that we work with a single estimator which updates our prior beliefs about parameter distributions. We declare the model and the sampler does the job for us! If it explodes, we probably mis-specified the model or priors.

The study guide for the third module was incredibly hard to put together, since there is so much content on the web and it’s so hard to strike a balance between clarity, simplicity, and the use-case being interesting, realistic. The most important criteria was for the case-study or resource to have code, data, and explained theory or methodology in a freely available e-book.

Often, you will have to combine various aspects of an use-case, model, method – from different sources, taking the best from each author.

I made sure to include interesting examples and archetypal applications for each statistical tool and theoretical topic, so you can immediately apply the concepts you read about or saw during the lectures. However, the responsibility to practice falls entirely on the learner – I can just do my best to make your journey less frustrating.

Introduction to Bayesian Statistics

After the first two modules, we should have a solid foundation for building more realistic models. As discussed in the introduction, randomized experiments and A/B tests have obvious limitations: might be unfeasible, unethical, or just not the right tool.

We are not wasting time by switching to Bayes (7 lectures to get to GLMs), because we treat all models under the same framework.

Instead of taking regression as the starting point, then going into advanced econometrics – I prefer we switch to a Bayesian perspective, so we can build custom models taking into account the particularities of the phenomena of interest. The course culminates in Hierarchical GLMs, which should be sufficient for the majority of problems you encounter in practice, or at least a good first attempt.

We start simple, by modeling a single random variable \(Y\), choosing the appropriate distribution for each phenomenon, a conjugate prior for the parameters, doing simulations – then sampling from the posterior with pymc, numpyro, or bambi.1

1 The R equivalents would be stan and brms, rstanarm. The latter two are a high-level API for most common models.

Limiting? Yes, as in reality we care about the relationship between random variables. However, we can get a lot of insight from thoughtful modeling of the data generating process, which will serve as building blocks in more complicated and realistic models.

In the second module, we applied Bayes rule and got some insightful results in three totally different domains. However, we weren’t doing neither statistics, nor inference – but got into the right mindset. Now it’s time for full-luxury Bayes!

I know, I know, the coin-tossing – simple, yet fundamental and found anywhere there is a binary outcome \(Y_i \in \{0, 1\}\). There are many ways to estimate the success probability \(\theta\), when we observe \(k\) successes from \(n\) trials.

- In Bayes’ Rules is a detailed exposition of the theory, with examples about Michelle’s election support and Milgram’s experiment.

- Share of biking traffic in different neighborhoods (BDA3, Ch2, Pr. 8)

- A/B Testing for proportions in BMH. Just remember that experiment design is much more nuanced than performing such inference or a test.

- Political attitudes in 2008 Election data (BDA3, Ch2, Pr. 21)

- Proportion of female births, given prior information. (BDA3, Ch2)

There are many more applications, but the ones below require more research and work. They are open ended and you can take these topics very far.

- The debate on the hot hand phenomenon is not yet over. Here are the bayesians weighting in and some new research.

- Basketball shot percentages and true shooting, brilliantly explained by thinking basketball in a playlist about NBA statistics.

- Important problem in ecology: estimating size of population based on samples (BDA3, Ch3, Pr. 6). The challenge is that in \(Bin(\theta, N)\) both parameters are unknown. Here is an old paper.

- Confidence intervals and lower bound for reddit posts like/dislike ratio. Read more about simple ranking algorithms and the issues of sample size: reddit, hacker news. It is a good opportunity to work with the reddit API in order to collect data about posts and comments.

The next step is learning how to model count data, which will open up to us applications of a different flavor. It is not a coincidence that when learning linear regression, we will extend it to poisson and logistic regression.

Note that prior choice and justification is an art and science: you will have to learn and practice how to articulate assumptions and encode your domain knowledge into the priors. There is no universal recipe, but there are some guiding principles.

Counts of independent events in a unit of (space/time/…), with a low probability. You can review the maths here. Below is a list of applications you can practice on:

- Deaths by horses in Prussian Army. Here is the historical data and a blog post if you need a refresher on Poisson distribution.

- Asthma mortality (BDA3, Ch2)

- Airplane fatal accidents and passenger deaths

- Estimating WWII production of German tanks based on samples captured

- Comparing football teams and goals in football matches

- Comparing birth rates in two hospitals

Check out the link functions for more sophisticated models. Also, in the examples above, we estimate the groups separately (corresponding to no pooling) – there are better ways. Also, you will see a poisson example of how to take into account the sample size

The next examples are a little detour, to appreciate the flexibility of the modeling approach we’re taking. We’re building upon previous models, by inferring which rate \(\lambda_1\) or \(\lambda_2\) is the most plausible at a given point in time. This way, we add complexity and realism to the model, by incorporating knowledge about the phenomenon we’re interested in.

Estimating rates, modeling a structural shift/change is a relevant, challenging, and unsolved problem in many fields. The models below are too simplistic to be useful in practice, but they capture the essence of real dynamics: things change not only continuously, but also structurally.

- Coal Mining disasters, pymc

- Text Messages, pymc

- U.S. School Shootings, is there an increase in attempts and occurences?

We already worked with multiple parameters, even touching upon the relationship between two variables: counts and time \(Y_t\), but not really – it’s more helpful to think about that in terms of stochastic processes. Therefore, we need a new tool, which is a link function, a nonlinear transformation \(g(x)\) which maps \(X\) to the correct support of \(Y\). I recommend to introduce this before jumping into gaussian linear regression (LR), in order not to have the (flawed) impression that the LR is the only game in the town.

This is an appropriate point to introduce linear regression and logistic regression. It is not too early, given the importance of those tools, however the presentation should be practical and pretty high level, as there is much nuance to deep-dive later.

Of course, we cannot forget about the Normal/Gaussian distribution, which is so prevalent in nature and pops up everywhere in the statistical practice. It is the building block of many following models. Remember the key idea of the expectation of independent random variables and estimating the mean from samples. Also, keep in mind any time you’re doing regression that it’s all about the conditional expectation \(\mathbb{E}_\theta[Y|X=x]\).

- You can find the theory and mathematical exposition in Bayes Rules, with a case-study of football concussions study

- Speed of light experiment (BDA3, Ch3), data. You will find here and in any statistics textbook the cases of known and unknown variance, and how the \(t_k\) test is derived from the first principles.

- As in the case of proportions, we can use the model above to model the difference between the means of two independent groups.

Multiple Groups and Hierarchical Models

We already worked with groups in the case of difference in means (proportions and continuous variables) and making inferences for three and more groups, treating them as separate. We will see that such an approach is called “no pooling”.

In the traditional teaching of statistics, the above would be covered by t-test, tests for proportions, and when it comes to groups, by ANOVA. If you were lucky, these would’ve been treated as particular versions of linear regression, like in “Most common statistical tests are linear models”.

One more reason for this is that groups and categorical variables do not receive the deserved, nuanced exposition in linear regression. Also, comparing groups is so widespread, that having a tool to deal with the challenges which it poses is immediately useful in your practice inside any firm.

In this section, the goal is to show the idea of hierarchical models and partial pooling. I agree with BDA3 (Bayesian Data Analysis 3ed) approach to teach it before regression, as the latter needs a lot of nuance and a long time to learn to apply properly.

There is no point in repeating all from the first section, as it is straightforward to apply for groups, as you’ll see when we compare it with a hierarchical approach.

However, it is a good chance for a frequentist detour, to the Agresti-Coull confidence intervals, \(\chi^2\) tests of independence, and nonparametric tests for proportions in multiple groups.

- Erica Ma has a great talk for hypothesis testing for 2+ groups.

- I think the authoritative resource on Bayesian versions of the distribution-free methods for hypothesis testing is “Bayesian Statistics for Experimental Scientists”, found here. Unfortunately, it is expensive, so I suggest you look at the table of contents and search for the implementations elsewhere.

I build upon the module 2 on A/B testing by introducing Multi-Armed Bandits. There are cases in which we are testing multiple groups, e.g. which images to show on the website, and we do it at scale. Moreover, we want to experiment countinuously and automatically, trading off between exploration and exploitation to maximize the payoff.

I suggest you start from the didactic examples in Bayesian Methods for Hackers, Chapter 6. If you have an use-case in which this fits perfectly, you can check out the theory and more variants to implement it in more specialized resources.

We encountered the problem of sample size when ranking barbecue diners and reddit posts, but it deserves a few lectures in itself. Once you master a few methods of reasoning about \(n\), you can cut through so much bullshit in media, research, and literature.

For continuity with the previous “Building Blocks” section, here are a few examples for groups, where the dependent variable is following the Poisson distribution. In this case, the novelty is choosing a gamma prior based on the sample size information of each group. If you think we can do better than this trick – you’re totally right.

The models become more complicated as we attempt to estimate parameters for each group of \(n\) observations:

- Kidney Cancer rates, with priors chosen in accordance to the sample size (BDA3, Ch2). An R visualization

- Guns and suicides, with ideas from empirical and hierarchical Bayes.

Complete pooling is when we ignore the fact that we have groups, and make one, global estimate. Of course, it would fall in the category of underfitting or model mis-specification, if the categories or groups are relevant.

Finally, we’re ready to see how partial pooling and hierarchical (multilevel) models are such an important and powerful innovation, to the point where some practitioners argue (and I agree), that it should be the default way of (regression) modeling. Meaning, a strong justification is needed why your model doesn’t need that structure or modeling of heterogeneity. Keep in mind this advice, but always start with the most simple models when iterating towards the final one.

The main example is a classic (gaussian): modeling the level of radon in houses on the first floor and basement, where the groups are counties (BDA3). We will see this example again in the Hierarchical GLMs section, where predictors at house-level and county-level are added.

- Omar Sosa - Practical introduction to Bayesian hierarchical modeling with numpyro, focuses on exactly the idea we need.

- Primary code reference: pymc - A Primer on Bayesian Methods for Multilevel Modeling

Beta-Bionmial examples:

- Eric Ma tutorial at pycon, about baseball batting, and the equivalent numpyro code. Another baseball example is a classic by Efron, implemented in pymc.

- Police shooting training – detecting race bias. A full Bayesian workflow in bambi.

- Another classic example is about Rat Tumors experiment, implemented in pymc, from BDA3, Ch5.

A great Gamma-Poisson example is presented by Richard McElreath in Statistical Rethinking lectures: “Starbucks coffee-shops waiting time in queue”.

One of my favorite business applications of the ideas outlined in this section, is in the seemingly innocent problem of computing the lifetime value of a customer. Just to show how tricky this problem is, there is a niche, but extensive literature on the probem, starting from 2000-2005 by Rossi, Fader, and Hardie, which is entering into the mainstream only now, by 2020-2023.

The below is by far not the only model: there are versions for contractual and non-contractual settings with different assumptions. The culmination of this research, in my opinion, is Eva Ascarza’s 2013 paper.

Model: Combining Gamma-Poisson and Beta-Binomial, with parameters at customer level. The math and code in pymc3 can be found here.

Regression and Bayesian Workflow

I think most practitioners and educators will agree that Linear Regression is the most important tool to master in statistics. I mean it in the most general sense – not only knowing model details, assumptions, extensions and limitations, but how to use it effectively in practice in an end-to-end workflow.

I highly recommend the (freely available) book “Regression and Other Stories”, by Andrew Gelman, Jennifer Hill and Aki Vehtari. The latter has a course website for this book, with the examples coded in stan.

Also, the second resource, which I would say is even beter, takes on the perspective of causal inference from the very beginning of studying regression. In the course homepage you will find links to the pymc port, slides, and video lectures from 2023.

- Eugene-Springfield community sample data: OCEAN personality traits as related to drug usage – no categorical variables, \(Y\) distribution normal-ish. Demo in bambi.

- Educational outcomes for hearing-impaired children, in pymc

- Dangers of spurious correlation and accounting for them are displayed in the marriages and waffles example by McElreath – written in numpyro, but there is also a pymc version.

- pymc moderation analysis: muscle mass and age

- This case study about 100m runs from Calling Bullshit, shows the dangers of extrapolation beyond the range of \(X\) that the models were trained on.

I have a third favorite book, which is freely available, called “Beyond Multiple Linear Regression”. It covers all that we discuss here, but from a frequentist perspective. Despite that, the examples are amazing and it has enough of theory to make it a self-contained resource – therefore, makes a perfect candidate for a port in pymc/numpyro. For a review of linear regression, take a look at the Kentucky derby horse race.

The linearity assumption in linear regression is often under-emphasized, as pointed out by the trio of RoS in a podcast episode. Linear Models are linear in parameters, but we should always think of the appropriate transformations of \(y\) and \(X\).

By introducing splines and nonlinear transformations, we can easily increase the dimensionality of \(X\), with respect to \(n\) – which causes massive problems for inference. This is the appropriate moment to introduce a fundamental tradeoff between model complexity and out-of-sample predictive performance.

Regression and Model Critique

Up until this point we took the sampling from the posterior provided by the probabilistic programming languages for granted, as magically converging to the true distribution. We also used very rudimentary methods of comparing models (via Bayes Factors).

However, things go wrong in practice all the time: poorly specified and parametrized models lead to computational problems. It is important to be able to diagnose the chains, and even more, actively critique and interogate your models. At some point, you have to get an understanding of what MCMC, HMC does under the hood – and when you would trade off the accuracy for faster, approximate inference.

Checking domain and modeling assumptions, critiquing the models we build are one of the most difficult aspect of statistical practice. It is hard to give a tutorial for this, so I just suggest you read Richard McElreath’s Statistical Rethinking chapters on this topic, along with the more theoretical BDA3, Part II.

- For an intuitive process which can structure your modeling and critique, I suggest McElreath’s “Drawing the Bayesian Owl”.

- Chapter 2 of Bayesian Computation in Python, by Osvaldo Martin (BCP) is one of the best at exemplifying these concepts.

- Prior and posterior checks pymc docs

- An end-to-end example in BCP, modeling the flight delays.

The frequentists and statistical learning approach have well-established tools for asessing the out-of-sample predictive performance. The fact that training is fast, they can easily use cross-validation and even bootstrap. A recent innovation in Bayes is LOO-PIT, an approximation to leave-one-out cross-validation, which leverages the fact that we have posterior sampling.

- A reminder of bootstrap, that is what the frequentists might do - chapter 10 or page 249. It is a powerful tool to have in your arsenal anyways.

- Here are the technical details of LOO-PIT, in the context of model comparison.

Regression and Model Selection

Once we are able to critique and evaluate a single model, it makes perfect sense to compare models of increasing complexity and with alternative assumptions. The following uses the same tools as before, but in a iterative workflow.

- pymc. - Model selection with fake data and polynomials

- Chapter 3 and 4 of BCP are beautiful, where linear and logistic regression are introduced, along with questions of interactions, nonlinear transformations, hierarchical models and model choice.

There are cases when the problem is in sampling and one common cause, besides mis-specification, are models with bad posterior geometry (from the perspective of samplers). As a rule of thumb – “round and smooth” is good. A prevalent solution in literature is to use better parametrizations, but in order to fix your problem, you first have to be aware that it might be the case.

- Technical: (mu, sigma) - Neal’s Funnel numpyro, there is equivalent pymc

- More solutions in numpyro for bad posterior geometry

These examples in 2D are intuitive, however things become much more complicated and counterintuitive in multidimensional parameter spaces. The long story short, in practice, is to use heavier regularization (via stronger priors) in those cases.

A big topic in ML is regularization and encouraging sparse solutions (models). The point is that in highly-dimensional spaces, only a small subset of variables is relevant. Bayesians have sneaky methods of constructing priors which act as a mechanism for variable selection.

There is an additional topic of robuts methods (to outliers). Some machine learning models are robust(ish) out of the box: quantile regression, gradient boosted trees. Bayesians use heavier-tailed distribution to achieve a similar effect.

- Spike and Slab example on Kaggle

- Horseshoe prior implemented in pymc3

- Robust linear regression on simulated data, implemented here, in pymc/bambi.

Regression and Nonlinearities

I can’t resist to suggest a case-study from finance: the good old portfolio returns optimization. The methods used in practice are much more sophisticated than the below, but it’s useful to be aware of the fundamentals. The Wishart distribution, used as a prior for positive semi-definite matrices (covariation) can be an useful building block in more complicated models to capture the covariation structure, e.g of different time series.

See the example with Wishart prior and portfolios from BMH. We could do an optimization on point estimates, but this aprroach is more general, capturing the uncertainty in estimates.

If you got to this last topic on linear regression, months probably have passed. There is a good reason universities dedicate entire semesters for regression – there are so many nuances to get right: from both practical and theoretical perspective. One of these complexities is how to handle missing data, which deserves a course on its own.

In Bayesian Statistics, we also have to be very explicit and declare our assumptions about how the data went missing – which is a hard, but beneficial thing to do. The good news – missingness is treated like an unobserved variable and is subject to the same inferences we’ve been doing before. We just need to pick the right model of missingness for each individual case.

- Missing data imputation, pymc, both for linear and hierarchical

- Discrete missing data imputation numpyro, with simulated data and causal graphs

- Pymcon - missing data tutorial in pymc3

- Missing Data Imputation

Generalized Linear Models

Not every phenomena we model is continuous or appropriate for gaussian linear regression, but we can easily extend it with the building blocks we learned at the beginning. It’s all about those link functions and relaxing some of the assumptions of the linear regression. I want to point out how the following mirrors the simple, single-variable models we first practiced on.

As a theoretical background of what connects these models into one single family, hence the name of GLMs, I suggest you read the section in the “Beyond MLR” about the exponential family. Richard McElreath has similar information-theoretic arguments in his lectures on Statistical Rethinking.

As a fun aside about machine learning, logistic regression is the only model which is calibrated out-of-the-box, meaning the scores for the classification can be interpreted as probabilities, due to its specific loss function.

For the rest of the models (e.g. random forests), you would need to calibrate via an isotonic or piecewise-linear regression, on a separate, validation dataset. So, going back to the practice, here are some examples using the logistic regression:

- Beetles survival by concentration of chemical, via bambi – tries out different link functions and is a simple example to get started with.

- Vote intention by age in the U.S., via bambi, 2016 ANES data, 1200 samples.

- Socio-economic influences on income bracket (\(\ge \$50k\)), also does model comparison, implementation in bambi

- Multinomial regression on the iris, the most boring dataset, but you’ll see that you have a solution for the multiclass classification without the multiple comparisons.

When you have a numeric target variable, think twice if it is appropriate for the gaussian distribution. Sometimes, what we model is really, counts, or non-negative, or monotonically increasing with respect to a \(X_i\) (that alone deserves its own case-study). Sometimes, even poisson doesn’t work out due to overdispersion or zero-inflation, and it is not a rare exception, especially when modeling aspects of customer behavior.

- Number of laws regarding equality, which includes a discussion for the issue of overdispersion. Not sure at all that this would be a prototypical DGP story for a Poisson.

- Stop and frisk data from BDA3 and RoS, the frequentist version

- Campus crime and estimating household size in Philippines from BeyondMLR.

- Alcohol and meds interaction, with simulated data.

The negative binomial distribution is often found in customer behavior, in contractual or non-contractual settings, when it comes to purchases, subscription renewals.

- Cockroaches and pest management, where Negative-Binomial, Poisson and Zero-Inflated NBD is investigated.

- Fishing catches in a park, in numpyro

- Students’ absence, UCLA data, application of negative binomial, written in bambi

In the probability fundamentals section, we discussed the use-case of estimating proportions and named the field of compositional data analysis. It is often found in practice, but disguises itself, which causes the wrong method to be applied.

Dirichlet regression, pymc3 - fake proportions dataset, but take some real ones from compositional data analysis books

Introduction to Hierarchical Models

We finally reached the most exciting point in our journey so far, where we can properly model and explain sources of variation at multiple levels (of analysis). This is where we relax the assumption of \(i.i.d.\), replacing it with one of exchangeability.

The point is that correlated data causes problems in modeling when we don’t account properly for it: be it groups, clusters, categorical variables, time series, geography, etc. BeyondMLR has a very well thought, practical motivation for multilevel models.

We come back to the radon example, by adding one covariate at each level: house and county. This explains a part of variation which was missed before and leverages pooling to take care of low sample size in some counties.

- Radon: Primary code reference: pymc - A Primer on Bayesian Methods for Multilevel Modeling

- bambinos a higher level API, models the log-radon. Alternatively, McStanPy implementation

- Omar Sosa - Practical introduction to Bayesian hierarchical modeling with numpyro

- Bayesian Multilevel Regression numpyro on OSIC Pulmonary Fibrosis Progression data, which assesses the risks of smoking and not only.

- BeyondMLR presents a really interesting psychological study about stage anxiety for music performers at a conservatory.

- A repeated analysis of Stack’s facial feedback hypothesis, in the context of replication crisis, via bambi – full workflow.

Hierarchical Models and Longitudinal Data

Longitudinal data is a special case of multilevel models, but has the distinct feature, that at the group level, the data isn’t iid, but comes as a realization of the stochastic process. Often called Panel Data in economics and social sciences. You will see a different terminology in that literature: of mixed or multilevel models.

These examples model the trend with a covariate, as a function of time – which is a happy case when we can do that. However, in practice, things become more complicated if we have to model and identify a stochastic process like \(AR(k)\), or \(MA(n)\).

- Longitudinal data with drinking teens, alcohol consumption per cohorts, pymc.

- Sleep study and reaction times in time by subject. The same modeling idea is in pig growth study, both implemented in bambi.

- Beyond MLR charter schools longitudinal study.

If in the previous sections, I didn’t have a strong preference for the Bayesian Approach, in the case of multilevel models, I strongly believe Bayesian Inference to be superior and less prone to overfitting and numerical instability.

Another aspect of this, is that most mixed models packages will make certain decisions for us, without our consent, which could influence our results and conclusions. As you’ll see in these tutorials, we are forced to construct the model and declare, justify every choice of prior and model structure.

Hierarchical Models case-studies

This is the end of the Module 3 (Applied Bayesian Statistics). These tools are so general and powerful, that they will last you a lifetime. However, there is so much more to learn and so many more challenges to tackle – that there is no end to the mastery of Multilevel GLMs.

- Beyond MLR, college basketball referee foul differential here

- Graduate Admissions, from McElreath, UC Berkeley in Fall 1973 (numpyro)

- Rasch item-response model for Nba fouls in crunch time, pymc

- Airbnb number of reviews

- Estimating the strength of a rugby team

- Paper investigating seat-belt use rates, with data probably taken from the department of transportation crashes website

At last, it is useful to see an example where we have an even more complicated multilevel structure, as it will be the case in some practical applications.

- Beyond MLR 3-level seed germination

Even after you learned all the technical intricacies, we’re hit again with the reality that association doesn’t imply causation. So, after being competent with this amazing tool – we have to go back to ideas from causal inference, if we want to get a more than associative insight about our theory and understanding of phenomena.

Bayesian Machine Learning and BART

As a bouns, I’m adding two flexible, general-purpose models, which you can treat as in the Machine Learning practices, but are Bayesian to the core. These are extremely useful if we care more about predictive accuracy, either for decision-making, or as a part of causal inference process, where a certain complicated relationship details aren’t important to us, but just the conditional average treatment effects.

- BART from osvaldo, on bike shares

- Podcast Episode and an R package

- Nonparametric methods - what is the benefit, name a few equivalents. Be able to explain a signed-rank transformation. This is the simplest and the most accessible explanation I know of so far.

After the first two modules, we should have a solid foundation for building more realistic models. As discussed in the introduction, randomized experiments and A/B tests have obvious limitations: might be unfeasible, unethical, or just not the right tool. Even when we design experiments or surveys, we still need to model non-response, correct for biases, and account for confounds – meaning, engage in modeling.

In my opinion, among many excellent resources, Richard McElreath’s 2023 lectures on statistical rethinking is the best way to learn the kind of modeling which fits our course and problem space.

I think that the best way to study regression and generalized linear models is to think very carefully about the causal processes underlying the phenomenon we’re studying. The big advantage of switching to a Bayesian perspective and GLMs, is that we treat all models under an unified framework. This means, we can focus on science and much less on the mathematical intricacies of advanced econometrics.

| Lecture Agenda | Keywords | Case Studies / Activities | |

|---|---|---|---|

| 1 | Introduction to Bayesian Statistics | Estimating proportions with Beta-Binomial, counts with Gamma-Poisson, defining priors | Elections, Football Goals, Speed of Light |

| 2 | Regression and the Bayesian Workflow | DAGs of influence, categorical variables, assumptions, nonlinearities, splines, simulation | E2E example on Howell’s study on height and weight. Summarizing estimands |

| 3 | Confounds. Good and Bad controls | Deep-dive on translating DAGs into statistical models. Adjustment set, backdoor criterion | E2E example of Waffle houses and divorce rates. Spurious correlations |

| ~ | Overfitting and Validation | Prior and posterior checks, LOO-CV, LOO-PIT, regularization, robust regression, simulation-based calibration | TBD |

| 4 | Multiple Groups and Hierarchical Models | Complete pooling, No pooling, Partial pooling. Post-stratification and non-representative samples | Radon concentration, Starbucks wait times, Customer LTV, Elections polls |

| 5 | Binomial GLMs | Link functions, Simpson’s paradox | 1973 Berkeley admissions |

| 6 | Poisson GLMs | Overdispersion, zero-inflated, Negative Binomial | Campus Crime, Student absence |

| 7 | Hierarchical GLMs | Deep-dive into model formulation. Studies on stage anxiety and plumonary fibrosis. | Basketball Fouls, AirBnb reviews, Seat Belts |

| 8 | Longitudinal Data | Challenges of multilevel time series | Teenage drinking, sleep study |

| ~ | Special topics | BART and Gaussian Processes | TBD |

| ~ | Special topics | Missing data and measurement error | TBD |